How to develop a voice skill in 4 steps

An increasingly digitized world means that we may actually be spending more time with our devices than we do with each other. Will Voice User Interfaces (VUIs) eventually become our primary means of interaction with brands and products?

As a study from Farner and the University of Lucerne reveals, 37% of Swiss people already use voice features. Although only 1% of the population uses smart speakers, 13% plan to get familiar with them in the future.

Even though those numbers are rather low, companies should start engaging with voice interfaces now, because voice is likely to become an important technology. Developing for voice interfaces is much like developing an application for any other platform, but like any new platform it comes with its own terminology and considerations.

Here is a four-step guide to developing a voice skill:

1) Define the Basics

First, think about the value proposition: What problem do you want to solve? What advantages does a voice skill bring to your specific product or service compared to other interfaces? Then, conceive a system persona and a user persona. The system persona is the conversational partner that serves as the front end of the technology and that the user interacts with directly. Its counterpart is the user persona: a fictional character that represents people from the target group, with specific traits and behaviour patterns. Before you can write a dialogue, you need a clear picture of who is communicating. Next, define the user's goals: What do they expect from using the voice skill? Also take the persona's context into account: Are they at home, in public, in a specific situation, in a hurry, etc.?

2) Design a Conversation

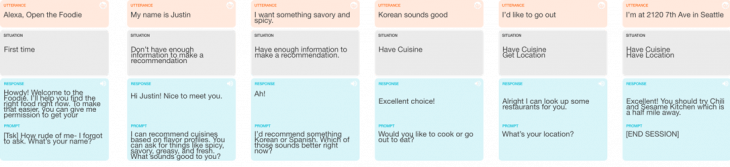

The most difficult part is probably designing conversations with technology that feel as human as possible. Humans are not going to change how they talk because of a new technology. To start your conversational design, set up your team and decide which role each member should play (system, user, note taker). Empathize with the system persona and with the user persona, including the user's goals and context. The conversation always starts with an opening question or invocation (e.g. “What’s the weather forecast for today?”). Think of different scenarios and ask questions that lead to a limited set of possible responses, so that errors can be avoided. Next, create a sample dialogue by acting it out with your team like a movie script, and document it on paper. Make the dialogue as natural as possible. Also, don’t forget to develop an error handling strategy: think of errors that could occur and how you would resolve them. (Hint: In conversations, there are no errors. Treat unexpected responses as regular input and keep the conversation going.)

3) Build a Prototype

The first step in building your prototype is to take the dialogues from the conversation design and convert them into storyboards. (Note: we based our skill on Amazon Alexa.)

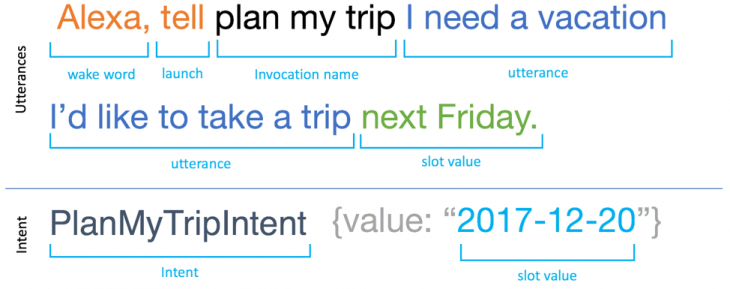

Now you need to connect these storyboards to the skill's backend logic. The link between the two is commonly referred to as the “interaction model”: it defines the voice interface through which users interact with the skill (the intents, sample utterances and slots), while the logic itself is implemented in the backend. Be aware of the differences between utterances, wake words, invocation names and slot values, which may all lead to one specific intent, such as “open a specific voice skill or app”. Consider the following example:

To start an interaction, users might say a specific invocation name (the name of the skill), e.g. “plan my trip”. Sometimes a skill is triggered without that invocation name. Instead, users invoke the skill with related utterances such as “I need a vacation” or “I’d like to take a trip”.

An utterance can carry additional information in the form of “slots”. Slots are variables within the phrase that the voice assistant recognises. For instance, in the phrase “Get me the weather in Bern.”, the word “Bern” could be replaced with a slot called {Swiss_Cities}. When Alexa hears the phrase “Get me the weather in” followed by the name of a Swiss city, it passes the name of the city to the backend as well.
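To make the idea of a slot more concrete, here is a minimal sketch in Python. It is only a toy illustration: Alexa performs this matching itself using speech recognition and language understanding, not a regular expression. The city list and the helper names `utterance_to_regex` and `extract_slots` are assumptions made up for this example.

```python
import re

# Hypothetical slot values; a real skill lists these in its interaction model.
SLOT_VALUES = {"Swiss_Cities": ["Bern", "Zurich", "Geneva", "Basel", "Lucerne"]}

# Sample utterance from the article, with the city replaced by a slot.
TEMPLATE = "Get me the weather in {Swiss_Cities}"


def utterance_to_regex(template, slot_values):
    """Turn a sample utterance with {slot} placeholders into a regex."""
    # re.split with a capturing group alternates literal text and slot names.
    parts = re.split(r"\{(\w+)\}", template)
    pattern = ""
    for index, part in enumerate(parts):
        if index % 2 == 0:
            # Literal text between slots.
            pattern += re.escape(part)
        else:
            # Slot: accept any of its known values as a named group.
            options = "|".join(re.escape(value) for value in slot_values[part])
            pattern += f"(?P<{part}>{options})"
    # Allow an optional trailing full stop and ignore case.
    return re.compile(rf"^{pattern}\.?$", re.IGNORECASE)


def extract_slots(phrase, template, slot_values):
    """Return a dict of slot values, or None if the phrase does not match."""
    match = utterance_to_regex(template, slot_values).match(phrase.strip())
    return match.groupdict() if match else None


if __name__ == "__main__":
    print(extract_slots("Get me the weather in Bern.", TEMPLATE, SLOT_VALUES))
    print(extract_slots("Get me the weather in Paris", TEMPLATE, SLOT_VALUES))
```

The first call yields the city as the value of the `Swiss_Cities` slot; the second yields nothing, because Paris is not in the list of known slot values.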

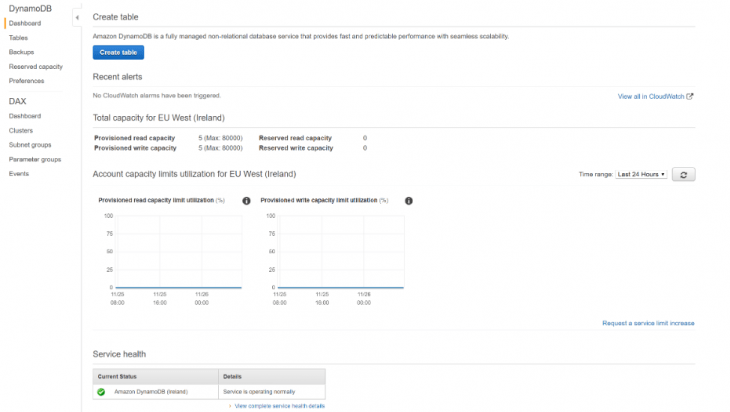

4) Implement the Skill

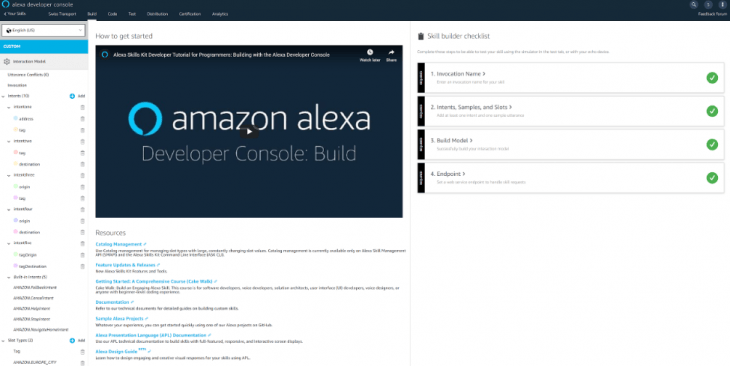

There are three main technologies to get familiar with in order to build and test an Alexa skill. The first is the Alexa Skills Kit. Here you write the phrases Alexa should listen for and group them by “intent”. When a user's phrase matches an intent, Alexa sends a message to the backend telling it which intent was called.
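As a rough sketch of what such a grouping looks like, the snippet below builds an interaction model as a Python dictionary and prints it as JSON, the format the Alexa developer console accepts. The intent name `GetWeatherIntent`, the slot type `SwissCity`, the sample phrases and the helper `samples_by_intent` are assumptions chosen for this example, not part of the skill described here.

```python
import json

# Illustrative interaction model; all custom names below are hypothetical.
interaction_model = {
    "interactionModel": {
        "languageModel": {
            # What users say to open the skill.
            "invocationName": "plan my trip",
            "intents": [
                {
                    "name": "GetWeatherIntent",
                    # The slot name appears in braces inside the samples.
                    "slots": [{"name": "Swiss_Cities", "type": "SwissCity"}],
                    "samples": [
                        "get me the weather in {Swiss_Cities}",
                        "what is the weather like in {Swiss_Cities}",
                    ],
                },
                # Built-in Amazon intents need no sample phrases.
                {"name": "AMAZON.HelpIntent", "samples": []},
                {"name": "AMAZON.StopIntent", "samples": []},
            ],
            # Custom slot type listing the values the slot can take.
            "types": [
                {
                    "name": "SwissCity",
                    "values": [
                        {"name": {"value": "Bern"}},
                        {"name": {"value": "Zurich"}},
                        {"name": {"value": "Geneva"}},
                    ],
                }
            ],
        }
    }
}


def samples_by_intent(model):
    """Map each intent name to its list of sample phrases."""
    language_model = model["interactionModel"]["languageModel"]
    return {
        intent["name"]: intent.get("samples", [])
        for intent in language_model["intents"]
    }


if __name__ == "__main__":
    print(json.dumps(interaction_model, indent=2))
```

Keeping the model as data makes it easy to review the phrases per intent with the whole team before pasting the JSON into the developer console.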

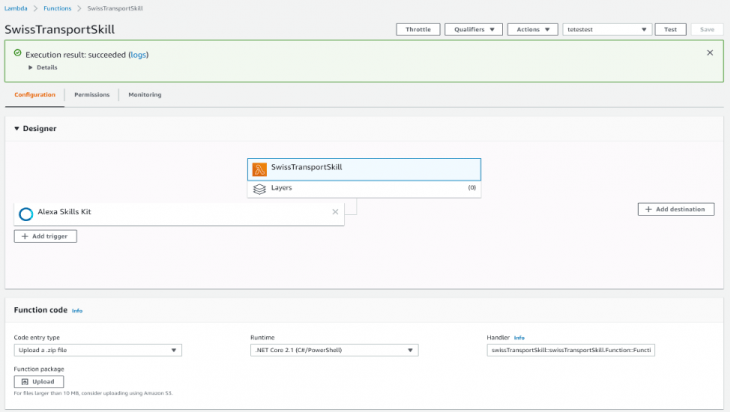

So, how does the message get to the backend? If you are using C#, as I did, the link is a handy service called AWS Lambda (part of Amazon Web Services), which is the second technology you need to get familiar with. Uploading a zip of your published code here allows the Alexa Skills Kit to send its message to your code for handling. You can also examine the logs here to see if anything has gone wrong. The upload process is quick and clean, which allows for frequent trial and error without wasting time waiting on approval for code submissions.
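The skill described here was written in C#, but the message exchange looks the same in any language. The following Python sketch shows, under simplifying assumptions, how a Lambda function might read the intent and slot from the incoming request and return a spoken response. The intent name `GetWeatherIntent`, the slot name `Swiss_Cities` and the reply texts are hypothetical, and no real weather lookup is performed.

```python
def build_response(text, end_session=True):
    """Wrap plain text in the JSON structure Alexa expects back."""
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": end_session,
        },
    }


def lambda_handler(event, context):
    """Entry point that AWS Lambda calls with the Alexa request as `event`."""
    request = event["request"]

    # The user opened the skill without asking for anything specific.
    if request["type"] == "LaunchRequest":
        return build_response("Welcome. Which city are you interested in?", False)

    if request["type"] == "IntentRequest":
        intent = request["intent"]
        if intent["name"] == "GetWeatherIntent":  # hypothetical intent name
            slots = intent.get("slots", {})
            city = slots.get("Swiss_Cities", {}).get("value")
            if city is None:
                # Treat the missing city as input and simply ask for it.
                return build_response("Sure. For which city?", False)
            # A real skill would query a weather service here.
            return build_response(f"Here is the weather for {city}.")

    # Anything else: keep the conversation going instead of failing.
    return build_response("Sorry, I did not get that. Which city?", False)


if __name__ == "__main__":
    sample_event = {
        "request": {
            "type": "IntentRequest",
            "intent": {
                "name": "GetWeatherIntent",
                "slots": {"Swiss_Cities": {"name": "Swiss_Cities", "value": "Bern"}},
            },
        }
    }
    print(lambda_handler(sample_event, None))
```

Because the handler is a plain function, it can be called locally with a hand-written event, as in the last lines, before anything is uploaded to AWS Lambda.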

The third technology you should know is DynamoDB. This is a database service commonly used with Alexa skills, and your AWS Lambda code can connect to it. DynamoDB is a NoSQL database, meaning that apart from the primary key you don't have to define a structure for your data before submitting it. As a result, it’s pretty easy to use, and you can view the contents easily through the AWS console.
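The sketch below illustrates what "no defined structure" means in practice: two items share only their key and otherwise carry different attributes. To keep the example runnable without an AWS account, it uses a small in-memory stand-in that mimics the `put_item` and `get_item` call shape of a boto3 table. The key name `userId`, the table name in the comment and the helper functions are assumptions for illustration.

```python
class InMemoryTable:
    """Local stand-in with the same call shape as a boto3 DynamoDB Table."""

    def __init__(self, key_name):
        self.key_name = key_name
        self.items = {}

    def put_item(self, Item):
        # Items are stored by key; no other attribute is required.
        self.items[Item[self.key_name]] = dict(Item)

    def get_item(self, Key):
        item = self.items.get(Key[self.key_name])
        # Like DynamoDB, omit "Item" from the result when nothing is found.
        return {"Item": dict(item)} if item is not None else {}


def save_user_state(table, user_id, **attributes):
    """Store arbitrary attributes for a user under the hypothetical key."""
    table.put_item(Item={"userId": user_id, **attributes})


def load_user_state(table, user_id):
    """Return the stored item, or None if the user is unknown."""
    return table.get_item(Key={"userId": user_id}).get("Item")


if __name__ == "__main__":
    # With real AWS access this could instead be (table name is hypothetical):
    #   import boto3
    #   table = boto3.resource("dynamodb").Table("VoiceSkillUsers")
    table = InMemoryTable("userId")
    save_user_state(table, "user-1", favouriteCity="Bern")
    save_user_state(table, "user-2", lastIntent="GetWeatherIntent", visits=3)
    print(load_user_state(table, "user-1"))
    print(load_user_state(table, "user-2"))
```

Passing the table in as a parameter keeps the skill logic testable locally and lets the real table be swapped in once the code runs in AWS Lambda.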

After you set up your voice skill, you definitely need to test it. Our colleague Neha wrote a detailed article on how to perform software tests for voice apps, covering the challenges, the drawbacks of manual testing and the strengths of test automation for voice.